Hello Everyone,

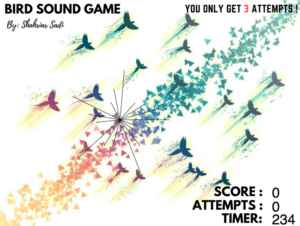

For my final project, I made a video game about NYU. The game is titled The Game of Life: NYU.

I made it using 4 different libraries:

P5 Dom

P5 Sound

P5 Scene Manager

P5 Gif

For the project itself the concept is as follows:

You’ve been accepted to college, and you are now one of the millions of people around the world who spend hours studying for an exam every week. Now that you are part of this community, your task is to graduate. Along the way, you will encounter many challenges and rewards. You will meet people, join clubs, receive internship opportunities, and eat food, but you will also make bad decisions, pay tuition, and you might even have a crisis regarding your major.

All of this takes place in the context of NYU. The game has a total of 5 levels, ordered like college (Freshman Year, Sophomore Year, etc.). The final level takes place after completing the senior year level. It is a boss level where you face the boss, Andy Hamilton, to receive your diploma. Once you receive it, you beat the game.
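
The level order described above can be sketched as a small list plus a function that moves to the next level. This is only an illustration, not the code from my sketch: the names `LEVELS` and `advanceLevel` are hypothetical, and "Junior Year" and the label "Boss Level" are filled in as assumptions.

```javascript
// Level order from the post: four college years, then the boss level.
// "Junior Year" and the label "Boss Level" are assumptions.
const LEVELS = [
  "Freshman Year",
  "Sophomore Year",
  "Junior Year",
  "Senior Year",
  "Boss Level",
];

// Called when the level at `index` has been cleared.
// Returns the next level index, or won: true after the last level.
function advanceLevel(index) {
  if (index >= LEVELS.length - 1) {
    return { index, won: true };
  }
  return { index: index + 1, won: false };
}

// Walk through every level once.
let progress = { index: 0, won: false };
while (!progress.won) {
  console.log(`Cleared ${LEVELS[progress.index]}`);
  progress = advanceLevel(progress.index);
}
console.log("Diploma received, game beaten");
```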

For the game these are the controls:

Left and Right for player movement

> Space Bar to pay tuition

> For Game Over:

> 1 to reset game

> For Intro:

> 1 to start game

> 2 to go into options

> For Options:

> 1 to go back

> Sliders to adjust volume
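
The controls above can be sketched as one function that takes the current state and a key and returns the next state. This is a rough sketch, not my actual code. It assumes that "Left and Right" are the arrow keys, that keys arrive as the browser's `KeyboardEvent.key` strings, that "go back" returns to the intro, and that "reset" restores the starting state. The names `handleKey`, `initialState`, `STEP`, `playerX`, and `tuitionPaid` are hypothetical.

```javascript
const STEP = 5; // hypothetical number of pixels moved per key press

function initialState() {
  return { scene: "intro", playerX: 0, tuitionPaid: false };
}

// Returns the next state for a key press; unknown keys leave it unchanged.
function handleKey(state, key) {
  switch (state.scene) {
    case "intro":
      if (key === "1") return { ...state, scene: "game" };
      if (key === "2") return { ...state, scene: "options" };
      return state;
    case "options":
      // Assumption: "go back" returns to the intro screen.
      if (key === "1") return { ...state, scene: "intro" };
      return state;
    case "gameOver":
      // Assumption: "reset" restores the starting state.
      if (key === "1") return initialState();
      return state;
    case "game":
      if (key === "ArrowLeft") return { ...state, playerX: state.playerX - STEP };
      if (key === "ArrowRight") return { ...state, playerX: state.playerX + STEP };
      if (key === " ") return { ...state, tuitionPaid: true };
      return state;
    default:
      return state;
  }
}

// Open options, go back, start the game, move right twice, pay tuition.
let state = initialState();
for (const key of ["2", "1", "1", "ArrowRight", "ArrowRight", " "]) {
  state = handleKey(state, key);
}
console.log(state); // scene "game", playerX 10, tuitionPaid true
```

Keeping the key handling in one pure function like this makes it easy to check each screen's keys without running the whole game.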

Sprites of the game:

![]()

What I have learned:

I’ve learned that spending time on code is actually really fun. As a CS major, I got the opportunity to explore beyond what the CS department offers me. I have learned about working with different libraries and APIs. I have learned about coding in JavaScript and in Java, while also picking up Processing as a skill along the way.

For the project itself, I have learned that collision detection is one of the most important aspects of game development. Most of my collision detection is 2D. Spending time making a video game has also given me the opportunity to break a project down into separate sections. I have also learned that working with the GIF library was difficult. The end product, unfortunately, will not use any GIFs.
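
For anyone curious what 2D collision detection looks like, here is a minimal sketch of one common approach, an axis-aligned bounding box (AABB) overlap test. It is not necessarily what my game does. The function name `rectsOverlap` and the example objects (`player`, `pizza`, `tuitionBill`) are hypothetical.

```javascript
// Axis-aligned bounding box (AABB) overlap test for two rectangles.
// Each rectangle is { x, y, w, h } with (x, y) as its top-left corner.
function rectsOverlap(a, b) {
  return (
    a.x < b.x + b.w && // a's left edge is left of b's right edge
    a.x + a.w > b.x && // a's right edge is right of b's left edge
    a.y < b.y + b.h && // a's top edge is above b's bottom edge
    a.y + a.h > b.y    // a's bottom edge is below b's top edge
  );
}

// Hypothetical example objects: a player and two items.
const player = { x: 100, y: 300, w: 40, h: 60 };
const pizza = { x: 120, y: 340, w: 20, h: 20 };
const tuitionBill = { x: 300, y: 50, w: 20, h: 20 };

console.log(rectsOverlap(player, pizza));       // true
console.log(rectsOverlap(player, tuitionBill)); // false
```

Two rectangles overlap only when they overlap on both the x axis and the y axis, which is why all four comparisons must be true.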

Here is a link to the game:

www.openprocessing.org/sketch/543417

If you guys have any questions about the code, please let me know. The comments I left can hopefully guide you.

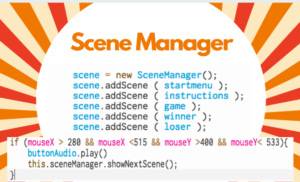

I created the game solely in p5.js. I chose p5.js over Processing in Java because of its libraries, the most important of which was Scene Manager. Scene Manager allowed me to create a start menu, an instruction menu, a game function, and winner and loser screens. Using Scene Manager, I could easily switch between those scenes without one scene's code affecting the others. Below I have attached an image of some snippets of the Scene Manager code. I also had various menus with buttons that lead to new scenes, and Scene Manager made that process much easier.
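
To show the idea behind scene switching, here is a tiny hand-rolled sketch. It is not the Scene Manager library's API and not my game's code. The names `SimpleSceneManager`, `makeMenuScene`, and `makeGameScene` are hypothetical, and the keys used are just for the example. Each scene keeps its own variables, so one scene's code cannot affect another's.

```javascript
// A tiny hand-rolled scene switcher (NOT the p5 Scene Manager library API).
class SimpleSceneManager {
  constructor() {
    this.scenes = new Map();
    this.current = null;
  }

  addScene(name, scene) {
    this.scenes.set(name, scene);
  }

  // Switch to the named scene and run its optional enter() hook.
  showScene(name) {
    const scene = this.scenes.get(name);
    if (!scene) throw new Error(`Unknown scene: ${name}`);
    this.current = scene;
    if (scene.enter) scene.enter();
  }

  // Only the active scene draws and receives key presses.
  draw() {
    return this.current ? this.current.draw() : "";
  }

  keyPressed(key) {
    if (this.current && this.current.keyPressed) {
      this.current.keyPressed(key, this);
    }
  }
}

// Hypothetical start menu scene.
function makeMenuScene() {
  return {
    draw: () => "Start menu: press 1 to play",
    keyPressed: (key, mgr) => {
      if (key === "1") mgr.showScene("game");
    },
  };
}

// Hypothetical game scene; `score` is private to this scene.
function makeGameScene() {
  let score = 0;
  return {
    enter: () => { score = 0; },
    draw: () => `Playing, score ${score}`,
    keyPressed: (key, mgr) => {
      if (key === " ") score += 1;
      if (key === "q") mgr.showScene("menu");
    },
  };
}

const mgr = new SimpleSceneManager();
mgr.addScene("menu", makeMenuScene());
mgr.addScene("game", makeGameScene());
mgr.showScene("menu");
mgr.keyPressed("1"); // menu -> game
mgr.keyPressed(" "); // score goes up inside the game scene only
console.log(mgr.draw()); // Playing, score 1
```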
