(John Cage and Lejaren Hiller working on HPSCHD)

HPSCHD by John Cage, a composer, and Lejaren Hiller, a pioneer in computer music, is one of the wildest musical compositions of the 20th century. Its first performance, in 1969 at the Assembly Hall of the University of Illinois at Urbana-Champaign, included 7 harpsichord performers, 7 pre-amplifiers, 208 computer-generated tapes, 52 projectors, 64 slide projectors with 6400 slides, and 8 movie projectors with 40 movies, and it lasted for about 5 hours.

(First performance)

Before explaining further, imagine listening to this for five hours.

When I first listened to the piece at MoMA, it felt like a devil was speaking to me, but the composition is actually one of the early examples of randomly generated computer music.

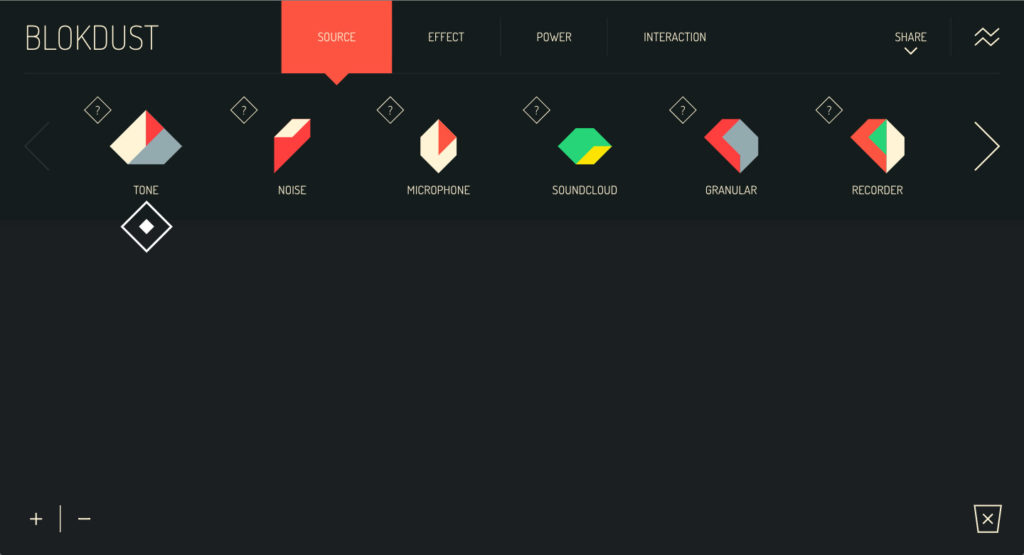

HPSCHD (the word ‘harpsichord’ contracted into a computer-style name) was created in celebration of the centenary of the University of Illinois at Urbana-Champaign in 1967. The commission required that the work involve the computer in one way or another. Cage did not want the computer to serve simply as an automatic machine that made his work easier; he envisioned a process of composition in which the computer becomes an indispensable part.

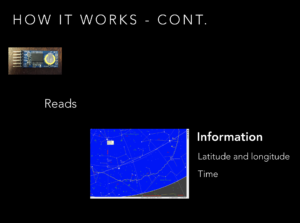

The composition involves up to 7 harpsichord performers and 51 magnetic tapes of pre-recorded digital synthesis, which manipulates the pitches and durations of sounds in pieces by Mozart, Chopin, Beethoven, and Schoenberg. The music was generated on the ILLIAC II computer using two programs written in Fortran, DICEGAME and HPSCHD.

DICEGAME is a subroutine designed to compose music for the seven harpsichords. It uses a random procedure attributed to Mozart, called the Dice Game, which generates music by rolling dice to select from pre-composed musical elements.
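To make the dice-game idea concrete, here is a minimal Python sketch. It is not the original Fortran DICEGAME code. The 16-bar length, the two-dice roll, and the lookup table of measure labels are all assumptions made for illustration; a real table would point to actual pre-composed measures.

```python
import random

NUM_BARS = 16          # assumed length of the piece, in bars
ROLLS = range(2, 13)   # possible totals of two six-sided dice

# Hypothetical lookup table: for each bar and each dice total,
# the label of one pre-composed measure.
TABLE = {
    bar: {roll: f"bar{bar:02d}-option{roll:02d}" for roll in ROLLS}
    for bar in range(1, NUM_BARS + 1)
}


def roll_two_dice(rng):
    """Return the total of two six-sided dice."""
    return rng.randint(1, 6) + rng.randint(1, 6)


def compose(rng):
    """Pick one pre-composed measure per bar by rolling the dice."""
    return [TABLE[bar][roll_two_dice(rng)] for bar in range(1, NUM_BARS + 1)]


if __name__ == "__main__":
    # Each run prints a different sequence of measure labels.
    print(compose(random.Random()))
```

Every run assembles the same kind of piece from the same building blocks, but the order of choices changes with each roll.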

The second program, HPSCHD, is responsible for the sounds recorded on the tapes. It synthesized sounds with harmonics similar to those of a harpsichord, using a random procedure from the I-Ching, or Book of Changes, an ancient Chinese divination text. It divided the octave into anywhere from 5 to 56 parts and calculated each for the 64 choices of the I-Ching procedure. I don’t completely understand how it works, but that apparently allows 885,000 different pitches to be generated.
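Here is a rough Python sketch of what dividing the octave and choosing among 64 options could look like. It is a guess at the general idea, not a reconstruction of the HPSCHD program. The equal-step division, the middle C reference pitch, the uniform random draw standing in for an I-Ching cast, and the way a hexagram number is mapped to a step are all assumptions.

```python
import random

BASE_HZ = 261.63  # assumed reference pitch (middle C); not from the source


def octave_steps(divisions, base_hz=BASE_HZ):
    """Frequencies of one octave split into `divisions` equal steps."""
    return [base_hz * 2 ** (k / divisions) for k in range(divisions)]


def cast_hexagram(rng):
    """Stand-in for an I-Ching cast: a uniform number from 1 to 64."""
    return rng.randint(1, 64)


def pick_pitch(divisions, hexagram, base_hz=BASE_HZ):
    """Map a hexagram number (1 to 64) onto one step of the divided octave."""
    steps = octave_steps(divisions, base_hz)
    index = (hexagram - 1) * divisions // 64  # assumed mapping
    return steps[index]


if __name__ == "__main__":
    rng = random.Random()
    for divisions in (5, 12, 56):
        hexagram = cast_hexagram(rng)
        print(divisions, hexagram, round(pick_pitch(divisions, hexagram), 2))
```

With 12 divisions this gives the familiar equal-tempered scale; the other divisions produce steps that fall between the keys of a normal keyboard.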

Each performance of HPSCHD is supposed to be different, because the sounds from the tapes and performers are randomly generated, the number of tapes and performers can vary, and all these parts can be arranged differently. A performance can play all of the sounds at once, individually, or somewhere in between. The recorded version above is just one of a practically endless number of variations.

Further research:

https://www.jstor.org/stable/3051496?seq=1#page_scan_tab_contents

(An academic journal article from University of Illinois Press. You can use your NYU ID to access it.)

https://www.wnyc.org/story/john-cage-and-lejaren-hiller-hpschd/

(Interesting podcast on HPSCHD)