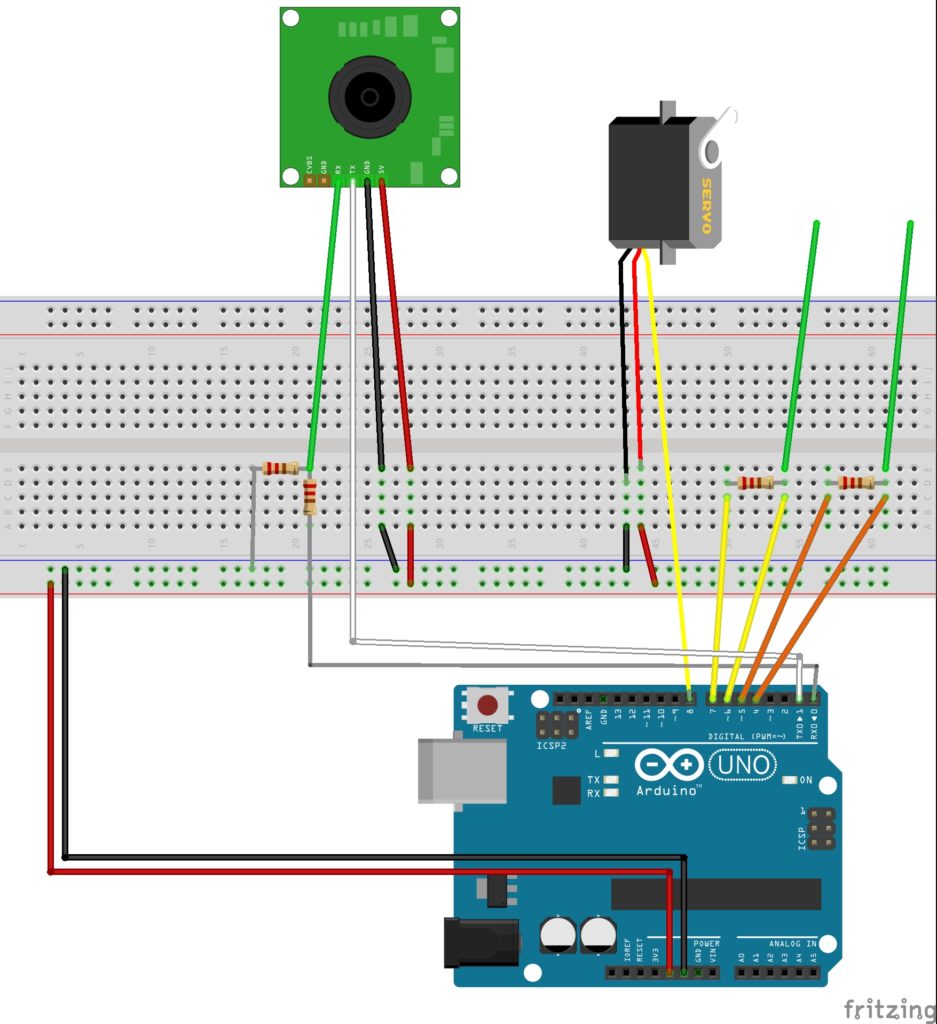

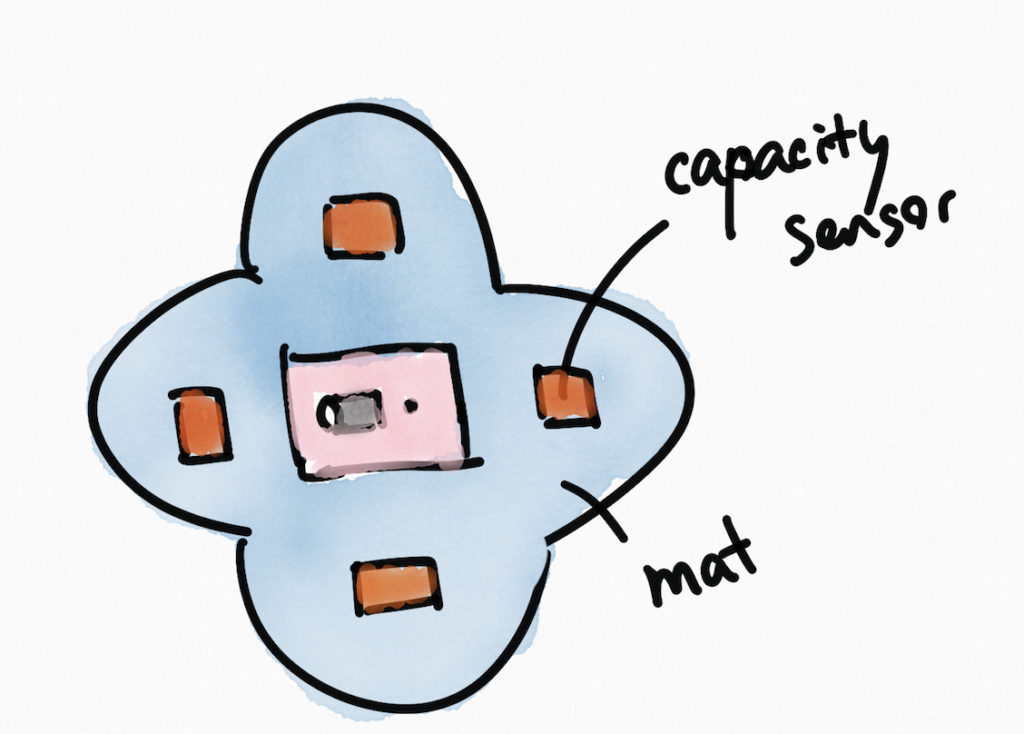

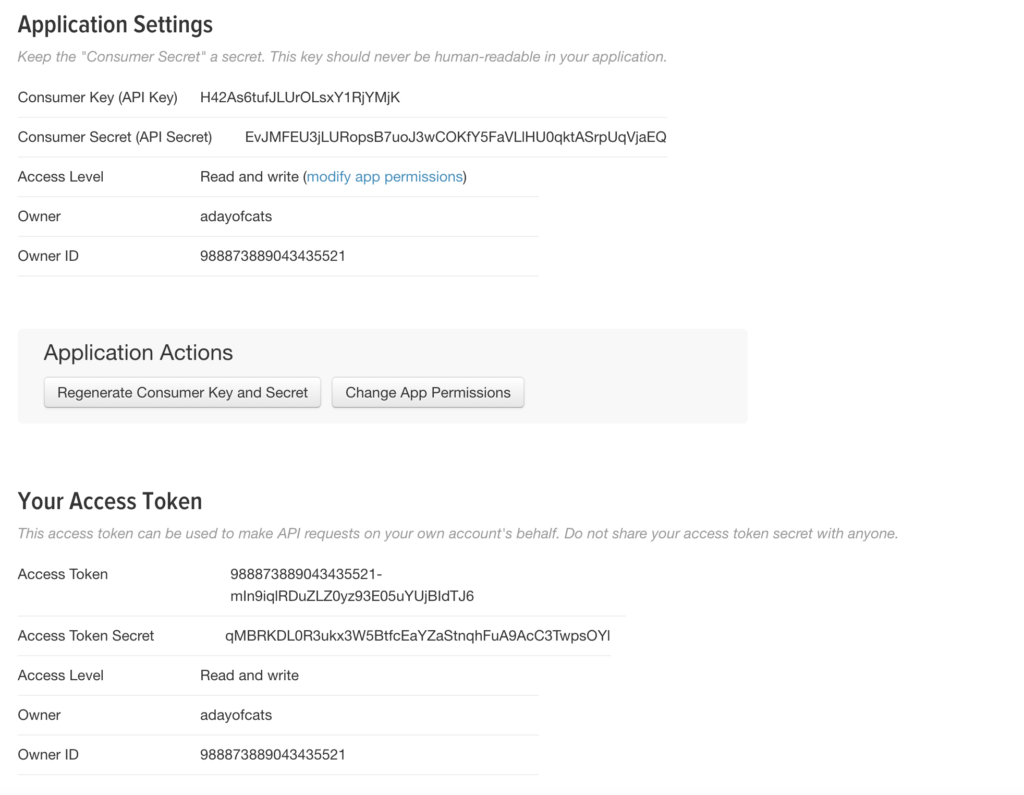

To build this project, I need to connect a capacitive sensor to an Arduino, pass its readings to Processing, and have Processing post to Twitter. Two libraries support my code: processing.serial and twitter4j (http://twitter4j.org/en/index.html).

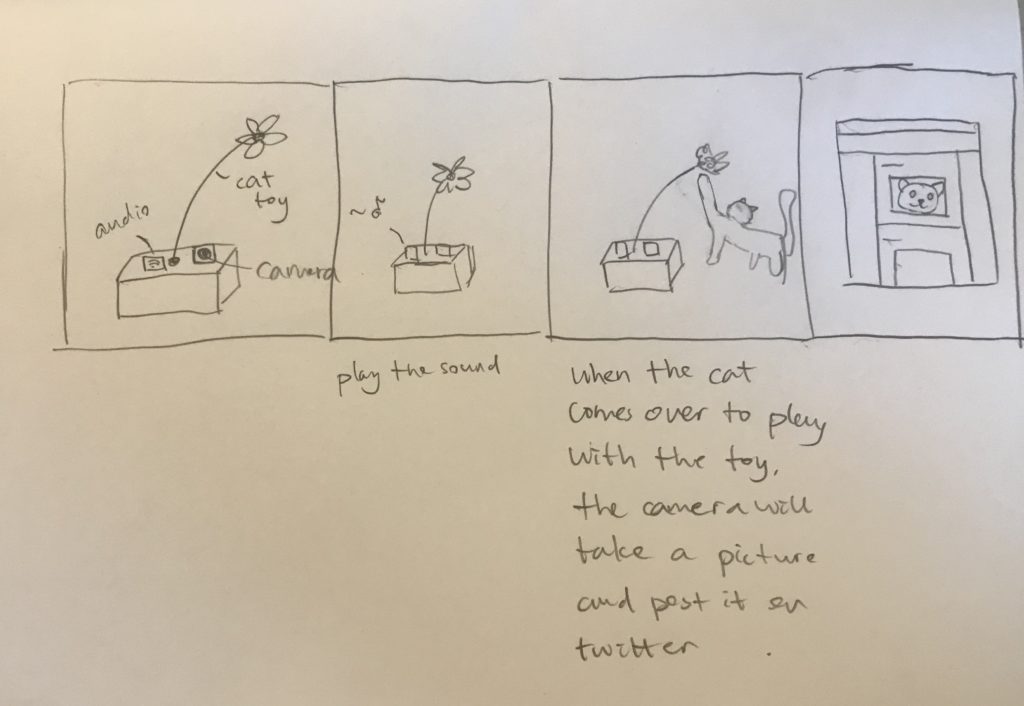

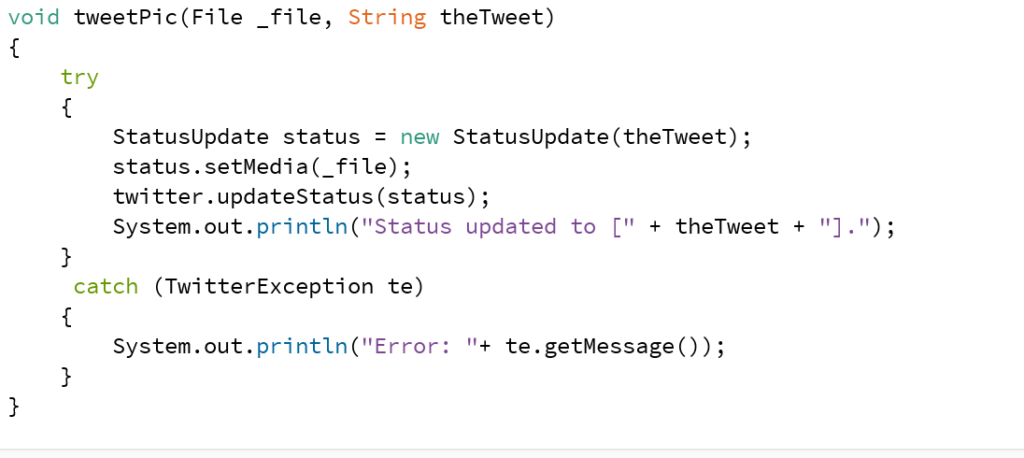

The main function of my project is to let the sensor control when a tweet is sent, so I started by setting up the Twitter API. I first tested it by tweeting on a key press (keyPressed).

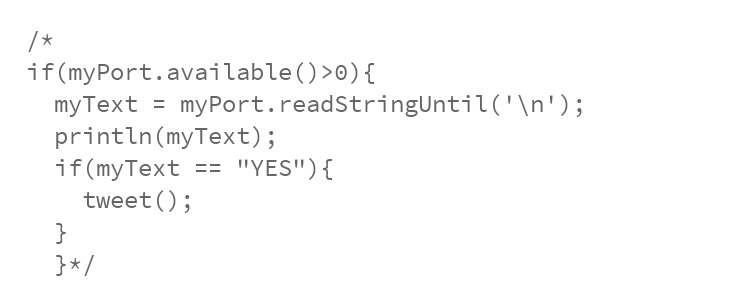

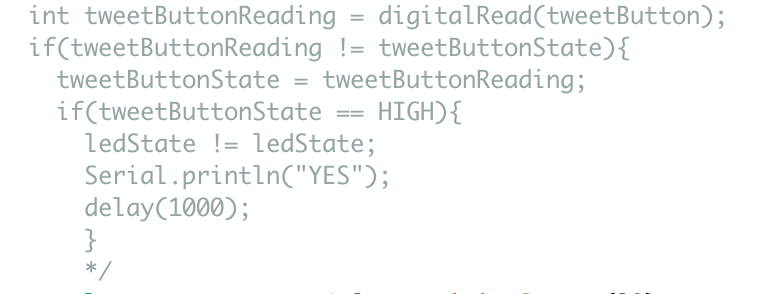

Then I tried to tweet with a button. This is the part where I ran into a problem. I had the Arduino send the string “YES” to Processing every time the button is pressed. Processing receives the string and prints it to the console with println, but I couldn't get it to tweet on this command. I think the problem may be caused by the data type, since it works when I receive int values from the capacitive sensor.
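One possible cause worth checking (I have not confirmed it is what happened here) is how the string is compared. If the Arduino sends the text with `Serial.println`, the line arrives with a trailing `\r\n`, and in Java-based Processing `==` on strings compares references rather than contents. The sketch below is plain Java, and `SerialCommand` and `isTweetCommand` are hypothetical names for illustration, not part of my project code. It shows a comparison that ignores the line ending.

```java
// Minimal sketch: decide whether a raw serial line means "tweet now".
// SerialCommand and isTweetCommand are hypothetical names for illustration.
class SerialCommand {

    // Returns true when the received text is "YES" once surrounding
    // whitespace (including a trailing "\r\n") has been removed.
    public static boolean isTweetCommand(String raw) {
        if (raw == null) {
            return false; // nothing was received on this read
        }
        // trim() strips the line ending; equals() compares contents,
        // whereas == would compare object references.
        return raw.trim().equals("YES");
    }

    public static void main(String[] args) {
        // Assumption: the Arduino used Serial.println("YES"),
        // so the line ends with "\r\n".
        String fromArduino = "YES\r\n";
        System.out.println(isTweetCommand(fromArduino));  // true
        System.out.println(fromArduino.equals("YES"));    // false
    }
}
```

In a Processing sketch, the same check would go around the call that sends the tweet, using whatever string the serial read returns.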

After that, I wired up the capacitive sensor. I ran the capacitive sensor test first and it worked perfectly. But the second time, no data showed up in the Arduino IDE. I tried the exact same code and wiring as before, but it didn't work. I changed the resistor, wiring, and breadboard, and luckily it worked in the end.

Paint the box:

Next step:

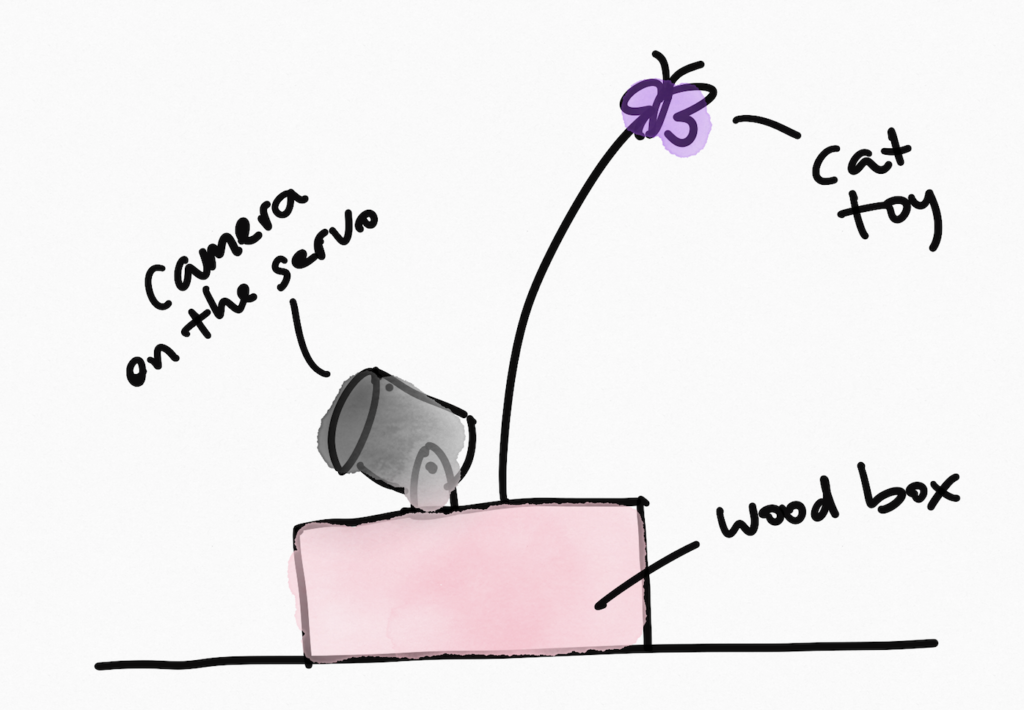

To improve my project, one of the most important parts is to set a limit/constraint on the data received from the Arduino. The values in Processing are currently a little hard to control. Next, I would like to upload an image taken by the webcam to Twitter. I have always wanted to make a stand-alone device, and I figured out that I can use XBee to let the Arduino connect to Processing wirelessly.
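One way the limit/constraint could look is sketched below in plain Java. It clamps each reading to a range, fires only when the value rises past a threshold, and then waits for a cooldown before it can fire again. The class name `TouchTrigger` and all numbers (range, threshold, cooldown) are assumptions for illustration and are not taken from my actual sensor data.

```java
// Minimal sketch of a limit/constraint on sensor readings.
// TouchTrigger and every numeric value here are hypothetical.
class TouchTrigger {
    private final int min;
    private final int max;
    private final int threshold;
    private final long cooldownMs;

    private boolean wasTouched = false; // state of the previous reading
    private boolean hasFired = false;   // whether a trigger has happened yet
    private long lastFiredMs = 0;       // time of the most recent trigger

    public TouchTrigger(int min, int max, int threshold, long cooldownMs) {
        this.min = min;
        this.max = max;
        this.threshold = threshold;
        this.cooldownMs = cooldownMs;
    }

    // Keeps a value inside [lo, hi].
    public static int clamp(int value, int lo, int hi) {
        return Math.max(lo, Math.min(hi, value));
    }

    // Returns true only when the clamped reading crosses the threshold
    // from below and the cooldown since the last trigger has elapsed.
    public boolean update(int rawReading, long nowMs) {
        int value = clamp(rawReading, min, max);
        boolean touched = value >= threshold;
        boolean risingEdge = touched && !wasTouched;
        wasTouched = touched;

        boolean cooledDown = !hasFired || (nowMs - lastFiredMs) >= cooldownMs;
        if (risingEdge && cooledDown) {
            hasFired = true;
            lastFiredMs = nowMs;
            return true;
        }
        return false;
    }

    public static void main(String[] args) {
        TouchTrigger trigger = new TouchTrigger(0, 1000, 500, 5000);
        System.out.println(trigger.update(100, 0));    // below threshold
        System.out.println(trigger.update(9999, 10));  // spike is clamped, then triggers
        System.out.println(trigger.update(800, 20));   // still touched, no new trigger
    }
}
```

In Processing, `update` could be called with each value read from the serial port and `millis()` as the time, and the tweet would be sent only when it returns true.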

code: https://github.com/yueningbai/final_cattoy

test video:

https://vimeo.com/268033313